Junbo Zhao (Jake)

Motto: 日拱一卒 (Advance a little every day, like a pawn in chess)

Assistant Professor, Zhejiang University

Director, Ant Group

Bio (2026 Version)

Junbo Zhao is an Assistant Professor at Zhejiang University (100-Young Professor Program) and a Director at Ant Group.

Current Focus (2025+): He focuses on two pivotal frontiers:

Next-Gen Scaling (dLLMs): Spearheading LLaDA 2.0 (100B MoE), a diffusion language model that matches AR performance with doubled inference speed.

The “Second Half” of Data: Moving from quantity to density. He explores high-leverage data strategies (synthesis, distillation, and evaluation benchmarks like HeartBench) to unlock efficiency.

Background: In 2024, he led the TableGPT project (TableGPT2 & R1), achieving over 200k downloads and widespread industry adoption in finance and manufacturing. He earned his Ph.D. from NYU under Turing Award laureate Yann LeCun. Previously, he contributed to foundational frameworks (PyTorch, FAISS) at Meta FAIR and developed the first end-to-end self-driving system at NVIDIA (showcased at GTC).

Impact: His work has garnered over 26,000 citations (as of Jan 2026). He has received the Zhejiang Province Science and Technology Progress First Prize (2024), the QbitAI 2025 AI Person of the Year award, and the WAIC Yunfan Award, and he was featured on the cover of Forbes 30-under-30.

news

| Dec 23, 2025 | TableGPT-R1 fully released and opened. |
|---|---|
| Dec 10, 2025 | LLaDA 2.0 fully released and opened. |
| Dec 06, 2025 | HeartBench (Evaluating LLMs’ Anthropomorphicity) is released. |
| Aug 23, 2025 | Six papers were accepted at EMNLP 2025. |

selected stuff

-

LLaDA2. 0: Scaling Up Diffusion Language Models to 100BarXiv preprint arXiv:2512.15745, 2025

LLaDA2. 0: Scaling Up Diffusion Language Models to 100BarXiv preprint arXiv:2512.15745, 2025 -

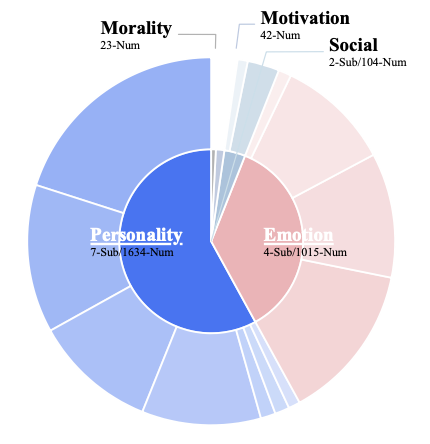

HeartBench: Probing Core Dimensions of Anthropomorphic Intelligence in LLMsarXiv preprint arXiv:2512.21849, 2025

HeartBench: Probing Core Dimensions of Anthropomorphic Intelligence in LLMsarXiv preprint arXiv:2512.21849, 2025 -

-

Every step evolves: Scaling reinforcement learning for trillion-scale thinking modelarXiv preprint arXiv:2510.18855, 2025

Every step evolves: Scaling reinforcement learning for trillion-scale thinking modelarXiv preprint arXiv:2510.18855, 2025 -

Tablegpt2: A large multimodal model with tabular data integrationarXiv preprint arXiv:2411.02059, 2024

Tablegpt2: A large multimodal model with tabular data integrationarXiv preprint arXiv:2411.02059, 2024 -

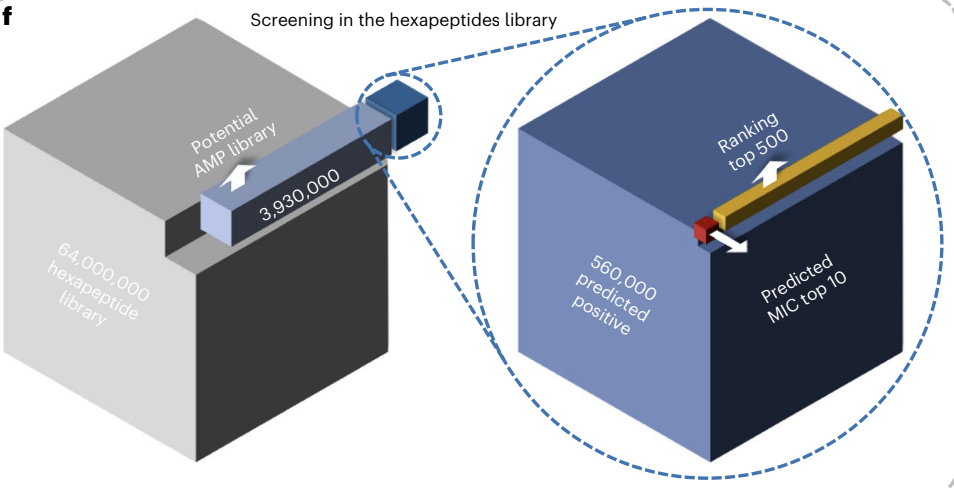

Identification of potent antimicrobial peptides via a machine-learning pipeline that mines the entire space of peptide sequencesNature Biomedical Engineering, 2023

Identification of potent antimicrobial peptides via a machine-learning pipeline that mines the entire space of peptide sequencesNature Biomedical Engineering, 2023